Table of Contents

- Introduction

- The Benefits of Bayesian Regression for Feature Selection

- How to Tune Hyperparameters for Bayesian Regression

- The Benefits of Bayesian Regression for Time Series Analysis

- Comparing Bayesian Regression to Traditional Regression Techniques

- Using Bayesian Regression to Make Predictions with Missing Data

- The Limitations of Bayesian Regression

- Understanding the Role of Priors in Bayesian Regression

- How to Implement Bayesian Regression in Python

- The Advantages of Bayesian Regression for Big Data

- Using Bayesian Regression to Make Predictions with Uncertainty

- Comparing Bayesian Regression to Other Machine Learning Algorithms

- Understanding the Basics of Bayesian Regression

- An Overview of Bayesian Regression and Its Applications

- How Bayesian Regression Can Help Improve Predictive Accuracy

- The Benefits of Bayesian Regression for Machine Learning

- Conclusion

“Unlock the Power of Probability with Bayesian Regression: Accurate Predictions for Machine Learning.”

Introduction

Bayesian regression is a machine learning technique that uses probabilistic models to make predictions. It is based on Bayes’ theorem, which states that the probability of a hypothesis given the evidence (the posterior) is proportional to the probability of the evidence given the hypothesis (the likelihood), multiplied by the prior probability of the hypothesis. Bayesian regression applies this theorem to the parameters of a regression model: prior knowledge about the parameters is combined with the observed data to produce a posterior distribution over them. This approach is particularly useful when data is limited or noisy, or when a measure of uncertainty is needed alongside each prediction, situations in which a single point estimate from traditional linear regression can be misleading. Bayesian regression can also be used to identify important features in a dataset and to make more accurate predictions.

The Benefits of Bayesian Regression for Feature Selection

Bayesian regression is a powerful tool for feature selection, which is the process of selecting the most relevant features from a dataset to use in a predictive model. This technique is particularly useful when dealing with datasets that contain a large number of features, as it can help to reduce the complexity of the model and improve its accuracy.

The main benefit of Bayesian regression for feature selection is that it allows for the incorporation of prior knowledge into the model. This means that the model can be informed by existing data and assumptions about the data, which can help to reduce the risk of overfitting and improve the accuracy of the model.

Bayesian regression also allows for the incorporation of uncertainty into the model. Instead of a single estimate for each coefficient, the model produces a distribution over plausible values, so a feature whose effect is poorly supported by the data shows up with a wide posterior rather than a confident but spurious estimate. This can help to reduce the risk of overfitting.

In addition, Bayesian regression can be used to identify important features in a dataset. This is done by examining the posterior distribution of each feature’s coefficient or, with a sparsity-inducing prior, the posterior probability that the feature belongs in the model given the data. Features whose coefficients are credibly different from zero can then be used to build a more accurate predictive model.
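A minimal sketch of this idea, assuming a Gaussian prior on the weights and a known noise variance so that the posterior has a closed form. The helper names (`posterior`, `prob_positive`), the synthetic data, and the use of sign certainty as a stand-in for a full inclusion probability are all assumptions made for illustration:

```python
import math
import numpy as np

def posterior(X, y, prior_precision=1.0, noise_var=1.0):
    """Gaussian posterior over weights for y = X @ w + noise,
    with prior w ~ N(0, I / prior_precision) and known noise variance."""
    d = X.shape[1]
    precision = prior_precision * np.eye(d) + X.T @ X / noise_var
    cov = np.linalg.inv(precision)
    mean = cov @ X.T @ y / noise_var
    return mean, cov

def prob_positive(mean, cov):
    """Posterior probability that each weight is greater than zero."""
    sd = np.sqrt(np.diag(cov))
    return np.array([0.5 * (1.0 + math.erf(m / (s * math.sqrt(2.0))))
                     for m, s in zip(mean, sd)])

# Synthetic data: the middle feature has no effect by construction.
rng = np.random.default_rng(0)
X = rng.normal(size=(200, 3))
true_w = np.array([2.0, 0.0, -1.5])
y = X @ true_w + rng.normal(scale=1.0, size=200)

mean, cov = posterior(X, y)
p_pos = prob_positive(mean, cov)
# A weight whose sign is nearly certain (probability near 0 or 1)
# is a candidate relevant feature.
sign_certainty = np.maximum(p_pos, 1.0 - p_pos)
```

Ranking features by `sign_certainty` is a simple heuristic. A spike-and-slab or other sparsity-inducing prior would give a true posterior inclusion probability, at the cost of a more involved model.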

Finally, Bayesian regression can be used to identify interactions between features. This is done by adding interaction terms to the model and examining the posterior distributions of their coefficients. Interactions whose coefficients are credibly different from zero can then be kept to build a more accurate predictive model.

Overall, Bayesian regression is a powerful tool for feature selection that can help to reduce the complexity of a model and improve its accuracy. It allows for the incorporation of prior knowledge and uncertainty into the model, as well as the identification of important features and interactions between features. As such, it is an invaluable tool for predictive modeling.

How to Tune Hyperparameters for Bayesian Regression

Tuning hyperparameters for Bayesian regression is an important step in ensuring that the model is able to accurately capture the underlying relationships in the data. The process of tuning hyperparameters involves selecting the optimal values for the parameters that control the model’s behavior. This can be done by using a variety of methods, such as grid search, random search, and Bayesian optimization.

Grid search is a method of hyperparameter tuning that involves systematically searching through a range of values for each parameter. This method is computationally expensive, but it can be effective in finding the optimal values for the parameters.

Random search is a method of hyperparameter tuning that involves randomly sampling from a range of values for each parameter. This method is less computationally expensive than grid search, but it can still be effective in finding the optimal values for the parameters.

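Bayesian optimization is a method of hyperparameter tuning that uses Bayesian inference to determine the optimal values for the parameters. It fits a probabilistic surrogate model to the results of earlier trials and uses it to choose the next values to try. Each step carries more overhead than a step of grid search or random search, but the method typically needs fewer model evaluations, which can make it more effective when each evaluation is expensive.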

Once the optimal values for the parameters have been determined, the model can be evaluated to determine its accuracy. This can be done by using a variety of metrics, such as mean absolute error, root mean squared error, and R-squared.
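The grid search and evaluation steps can be sketched as follows. This is an illustration under stated assumptions, not a definitive recipe: the only hyperparameter tuned is the prior precision of a Gaussian prior, the noise variance is treated as known, and the data, grid values, and helper names are hypothetical.

```python
import numpy as np

def posterior_mean(X, y, prior_precision, noise_var=1.0):
    """Posterior mean of the weights under a N(0, I / prior_precision) prior."""
    d = X.shape[1]
    A = prior_precision * np.eye(d) + X.T @ X / noise_var
    return np.linalg.solve(A, X.T @ y / noise_var)

def metrics(y_true, y_pred):
    """Return (mean absolute error, root mean squared error, R-squared)."""
    err = y_true - y_pred
    mae = np.mean(np.abs(err))
    rmse = np.sqrt(np.mean(err ** 2))
    r2 = 1.0 - np.sum(err ** 2) / np.sum((y_true - y_true.mean()) ** 2)
    return mae, rmse, r2

# Synthetic data with only three informative features out of ten.
rng = np.random.default_rng(1)
X = rng.normal(size=(60, 10))
w_true = np.zeros(10)
w_true[:3] = [1.5, -2.0, 0.5]
y = X @ w_true + rng.normal(scale=1.0, size=60)
X_train, y_train, X_val, y_val = X[:40], y[:40], X[40:], y[40:]

# Grid search: fit once per candidate value, score on held-out data.
grid = [0.01, 0.1, 1.0, 10.0, 100.0]
results = {}
for alpha in grid:
    w = posterior_mean(X_train, y_train, alpha)
    results[alpha] = metrics(y_val, X_val @ w)

# Pick the candidate with the lowest validation RMSE.
best_alpha = min(results, key=lambda a: results[a][1])
```

Random search would replace the fixed `grid` with values drawn at random from a range. Selecting on a single validation split is the simplest option; cross-validation would give a less noisy choice.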

By tuning the hyperparameters for Bayesian regression, it is possible to ensure that the model is able to accurately capture the underlying relationships in the data. This can help to improve the accuracy of the model and ensure that it is able to make accurate predictions.

The Benefits of Bayesian Regression for Time Series Analysis

Bayesian regression is a powerful tool for time series analysis that can provide a more accurate and reliable prediction of future values. This method of regression is based on Bayesian probability theory, which is a form of statistical inference that uses prior knowledge to update beliefs about the probability of an event occurring. By incorporating prior knowledge into the analysis, Bayesian regression can provide more accurate predictions than traditional regression methods.

Bayesian regression is particularly useful for time series analysis when the model is built to account for the temporal dependencies between observations, for example by including lagged values of the series as predictors. Observations at nearby points in time are likely to be related, and a model that encodes this can better capture the underlying trends in the data and make more accurate predictions.
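One simple way to encode temporal dependence is to regress each value on the previous one. The sketch below does this for a simulated first-order autoregressive series, assuming a Gaussian prior on the coefficient and a known noise variance. The variable names and the simulated data are assumptions for illustration only.

```python
import numpy as np

# Simulate an AR(1) series: each value depends on the one before it.
rng = np.random.default_rng(2)
phi_true, n = 0.8, 300
series = np.zeros(n)
for t in range(1, n):
    series[t] = phi_true * series[t - 1] + rng.normal(scale=1.0)

lagged = series[:-1]   # predictor: value at time t-1
target = series[1:]    # response: value at time t

# Closed-form posterior for the single coefficient under a
# N(0, 1/prior_precision) prior with known noise variance.
prior_precision, noise_var = 1.0, 1.0
post_var = 1.0 / (prior_precision + (lagged @ lagged) / noise_var)
post_mean = post_var * (lagged @ target) / noise_var

# One-step-ahead forecast: the predictive variance combines the
# observation noise with the remaining uncertainty about the coefficient.
next_mean = post_mean * series[-1]
next_var = noise_var + post_var * series[-1] ** 2
```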

Another advantage of Bayesian regression is that it can incorporate prior knowledge about the data. This can be used to inform the model and improve its accuracy. For example, if the analyst knows that certain values are more likely to occur than others, they can incorporate this information into the model. This can help the model to better capture the underlying patterns in the data and make more accurate predictions.

Finally, Bayesian regression is also useful for time series analysis because it can incorporate uncertainty into the model. Each forecast comes with a predictive distribution rather than a single number, which reflects the fact that the future is uncertain and that the predictions may not be 100% accurate. This does not make the point forecast more accurate by itself, but it shows how far to trust it and can help to reduce the risk of acting on an overconfident prediction.

Overall, Bayesian regression is a powerful tool for time series analysis that can provide more accurate and reliable predictions. By incorporating prior knowledge and uncertainty into the model, Bayesian regression can help to better capture the underlying patterns in the data and make more accurate predictions.

Comparing Bayesian Regression to Traditional Regression Techniques

Bayesian regression is a statistical technique that uses Bayesian inference to estimate the parameters of a regression model. It is a powerful tool for analyzing data and making predictions. Compared to traditional regression techniques, Bayesian regression offers several advantages.

First, Bayesian regression allows for the incorporation of prior knowledge into the model. This means that the model can be tailored to the specific data set being analyzed, allowing for more accurate predictions. Additionally, Bayesian regression can incorporate uncertainty into the model, which can help to reduce overfitting.

Second, Bayesian regression can be used to estimate the probability of a given outcome. This can be useful for making decisions in uncertain situations. For example, a Bayesian regression model could be used to estimate the probability of a customer making a purchase, which could be used to inform marketing decisions.

Finally, Bayesian regression can be used to estimate the uncertainty of the model itself. This can be useful for assessing the reliability of the model and for determining when it is appropriate to use the model for making predictions.

Overall, Bayesian regression is a powerful tool for analyzing data and making predictions. It offers several advantages over traditional regression techniques, including the ability to incorporate prior knowledge, estimate probabilities, and assess the uncertainty of the model.

Using Bayesian Regression to Make Predictions with Missing Data

Bayesian regression is a powerful statistical technique that can be used to make predictions with missing data. It is based on Bayes’ theorem, which states that the probability of a hypothesis given the data is proportional to the probability of the data given the hypothesis, multiplied by the prior probability of the hypothesis.

Bayesian regression works by using prior information about the data to make predictions. This prior information can be used to fill in missing data points. The prior information is used to create a probability distribution for the missing data points, which is then used to make predictions.
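A rough sketch of this idea is shown below. It makes several simplifying assumptions that are not in the text above: the predictor and response are treated as jointly Gaussian, the distribution for the missing values is estimated from the complete cases rather than inferred jointly with the regression, and the noise variance is treated as known. All names and data are hypothetical.

```python
import numpy as np

rng = np.random.default_rng(3)
n = 200
x = rng.normal(loc=2.0, scale=1.0, size=n)
y = 3.0 * x + rng.normal(scale=0.5, size=n)

# Knock out roughly 20% of the predictor values at random.
x_obs = x.copy()
missing = rng.random(n) < 0.2
x_obs[missing] = np.nan
obs = ~missing

# Distribution of a missing x given its observed y, estimated from
# complete cases (Gaussian conditional mean and variance).
mx, my = x_obs[obs].mean(), y[obs].mean()
c = np.cov(x_obs[obs], y[obs])
cond_slope = c[0, 1] / c[1, 1]
cond_var = c[0, 0] - c[0, 1] ** 2 / c[1, 1]

def posterior_slope(xv, yv, prior_precision=1.0, noise_var=0.25):
    """Posterior mean and variance of the slope (no intercept, N(0, 1/precision) prior)."""
    var = 1.0 / (prior_precision + xv @ xv / noise_var)
    return var * (xv @ yv) / noise_var, var

# Draw several plausible completions of the data and refit each time.
slopes = []
for _ in range(20):
    x_imp = x_obs.copy()
    x_imp[missing] = (mx + cond_slope * (y[missing] - my)
                      + rng.normal(scale=np.sqrt(cond_var), size=missing.sum()))
    slopes.append(posterior_slope(x_imp, y)[0])

slope_mean, slope_spread = np.mean(slopes), np.std(slopes)
```

The spread across imputations (`slope_spread`) gives a sense of how much the missing values contribute to the uncertainty in the estimate. A fully Bayesian treatment would sample the missing values and the parameters together.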

The standard Bayesian regression model is based on the assumption that the data points are independent and identically distributed given the model parameters. This means that, once the parameters are known, each data point tells us nothing further about the others, and that the same distribution applies to all data points.

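The standard Bayesian regression model also assumes that the errors are normally distributed. This means that the deviations of the observations from the regression line are assumed to follow a bell-shaped curve. This assumption is not required, but it keeps the posterior easy to compute, and the predictions are most reliable when it approximately holds.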

The Bayesian regression model is also able to account for uncertainty in the data. This means that the model can make predictions even when there is uncertainty in the data. This is especially useful when making predictions with missing data.

The Bayesian regression model is a powerful tool for making predictions with missing data. It is based on Bayes’ theorem and uses prior information to fill in missing data points. In its standard form it assumes normally distributed errors, and it can account for uncertainty in the data.

The Limitations of Bayesian Regression

Bayesian regression is a powerful tool for predictive modeling, but it is not without its limitations. One of the primary limitations of Bayesian regression is that its results depend heavily on the choice of prior when data is scarce. This is because Bayesian regression combines prior knowledge with the data to make predictions, and with few observations the prior dominates. If that prior knowledge is not reliable, the predictions will be inaccurate, and a large amount of data may be needed to outweigh it.

Another limitation of Bayesian regression is that it is computationally intensive. Outside of simple conjugate models, the posterior has no closed form and must be approximated, typically by sampling methods that evaluate the model many thousands of times. This can be a problem for large datasets, as the computational time can become prohibitively long.

Finally, Bayesian regression is limited by its assumptions. The standard model assumes that the errors are normally distributed, which may not always be the case. If they are not, for example when the noise is heavy-tailed, the model may not be able to accurately predict the outcome unless a different likelihood is chosen. Additionally, the model assumes that the observations are independent given the parameters, which may not always be true. If the observations are not independent, the uncertainty estimates in particular can be misleading.

Overall, Bayesian regression is a powerful tool for predictive modeling, but it is not without its limitations. It is sensitive to the prior when data is scarce, is computationally intensive, and is limited by its assumptions.

Understanding the Role of Priors in Bayesian Regression

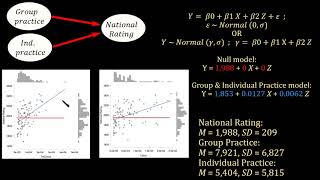

Bayesian regression is a statistical technique that uses Bayesian inference to estimate the parameters of a regression model. It is based on the assumption that the data are generated from a probability distribution that is determined by a set of unknown parameters. The goal of Bayesian regression is to estimate these parameters using prior information about the data and the model.

Priors are an important component of Bayesian regression. A prior is a probability distribution that is used to represent prior knowledge about the parameters of the model. This prior knowledge can be based on previous studies, expert opinion, or other sources of information. The prior distribution is used to inform the estimation of the parameters of the model.

The choice of prior is important because it can have a significant impact on the results of the regression. If the prior is too narrow, it can overwhelm the data and lead to underfitting, while if it is too broad, it provides little regularization and may lead to overfitting. The prior should be chosen carefully to ensure that it accurately reflects the prior knowledge about the data and the model.

In addition to the choice of prior, the strength of the prior can also affect the results of the regression. A strong prior will have a greater influence on the results than a weak prior. The strength of the prior should be chosen carefully to ensure that it does not overly influence the results.
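The effect of prior strength can be seen in a small sketch. It assumes a single slope parameter with a zero-mean Gaussian prior and known noise variance, where a larger prior precision means a stronger prior. The function name and the synthetic data are assumptions for illustration.

```python
import numpy as np

# A small dataset, so the prior has a visible effect.
rng = np.random.default_rng(4)
x = rng.normal(size=15)
y = 2.0 * x + rng.normal(scale=1.0, size=15)

def posterior_slope(x, y, prior_precision, noise_var=1.0):
    """Posterior mean and variance of the slope under a N(0, 1/prior_precision) prior."""
    var = 1.0 / (prior_precision + x @ x / noise_var)
    return var * (x @ y) / noise_var, var

# From a very weak prior to a very strong one.
strengths = [0.01, 1.0, 10.0, 100.0]
means = [posterior_slope(x, y, a)[0] for a in strengths]
variances = [posterior_slope(x, y, a)[1] for a in strengths]
```

As the prior precision grows, the posterior mean is pulled further toward the prior mean of zero and the posterior variance shrinks, regardless of what the data says. With a very weak prior the estimate is close to the least-squares slope.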

In summary, priors are an important component of Bayesian regression. They are used to represent prior knowledge about the parameters of the model and can have a significant impact on the results of the regression. The type and strength of the prior should be chosen carefully to ensure that they accurately reflect the prior knowledge about the data and the model.

How to Implement Bayesian Regression in Python

Bayesian regression is a powerful statistical technique that can be used to make predictions and draw inferences from data. It is based on Bayes’ theorem, which states that the probability of a hypothesis given the evidence is proportional to the probability of the evidence given the hypothesis, multiplied by the prior probability of the hypothesis. In Bayesian regression, the prior distribution encodes what is believed about the parameters of the model before seeing the data, and the posterior distribution is used to estimate the parameters and to make predictions.

In Python, Bayesian regression can be implemented using the PyMC3 library, which is now developed under the name PyMC. PyMC3 is a probabilistic programming library that provides a variety of tools for Bayesian inference. It includes a variety of sampling algorithms, such as Metropolis-Hastings and Hamiltonian Monte Carlo, which can be used to estimate the parameters of the model.

To implement Bayesian regression in Python, the first step is to define the model. This involves specifying the prior distributions for the parameters, as well as the likelihood function. Once the model is defined, the next step is to sample from the posterior distribution using one of the sampling algorithms provided by PyMC3. This will generate a set of samples from the posterior distribution, which can then be used to make predictions.
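To show what these steps involve without depending on a particular library version, the sketch below is not PyMC3 code. It is a hand-written random-walk Metropolis sampler in numpy, swapped in purely for illustration. The priors, the known noise standard deviation, the step size, and the function names are all assumptions.

```python
import numpy as np

rng = np.random.default_rng(5)
x = rng.uniform(-2, 2, size=100)
y = 1.0 + 2.5 * x + rng.normal(scale=0.5, size=100)

def log_posterior(theta):
    """Step 1, the model: N(0, 10^2) priors on intercept and slope,
    Gaussian likelihood with known standard deviation 0.5.
    Returns the log posterior up to an additive constant."""
    intercept, slope = theta
    log_prior = -0.5 * (intercept ** 2 + slope ** 2) / 10.0 ** 2
    resid = y - (intercept + slope * x)
    log_lik = -0.5 * np.sum(resid ** 2) / 0.5 ** 2
    return log_prior + log_lik

def metropolis(n_samples=5000, step=0.05):
    """Step 2, sampling: random-walk Metropolis over (intercept, slope)."""
    theta = np.zeros(2)
    current = log_posterior(theta)
    samples = np.empty((n_samples, 2))
    for i in range(n_samples):
        proposal = theta + rng.normal(scale=step, size=2)
        candidate = log_posterior(proposal)
        # Accept with probability min(1, posterior ratio).
        if np.log(rng.random()) < candidate - current:
            theta, current = proposal, candidate
        samples[i] = theta
    return samples

samples = metropolis()
burned = samples[1000:]   # discard early samples taken before convergence

# Step 3, using the samples: posterior means and a 95% interval for the slope.
intercept_mean, slope_mean = burned.mean(axis=0)
slope_interval = np.percentile(burned[:, 1], [2.5, 97.5])
```

A library sampler would normally be preferred in practice, since it tunes the step size automatically and provides convergence diagnostics that this sketch omits.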

Finally, the results of the Bayesian regression can be evaluated using a variety of metrics, such as the mean squared error or the log-likelihood. These metrics can be used to compare different models and select the best one for the given data.

In summary, Bayesian regression is a powerful statistical technique that can be used to make predictions and draw inferences from data. It can be implemented in Python using the PyMC3 library (now PyMC), which provides a variety of tools for Bayesian inference. Once the model is defined, the posterior distribution can be sampled using one of the sampling algorithms provided by the library. Finally, the results of the Bayesian regression can be evaluated using a variety of metrics.

The Advantages of Bayesian Regression for Big Data

Bayesian regression is a powerful statistical technique that has become increasingly popular for analyzing large datasets. It is a form of regression analysis that uses Bayesian inference to estimate the parameters of a model. Bayesian regression has several advantages over traditional regression methods when dealing with big data.

First, Bayesian regression is more flexible than traditional regression methods. It allows for the inclusion of prior information about the parameters of the model, which can be used to improve the accuracy of the estimates. This is especially useful when a large dataset contains many features or many small subgroups, as the prior stabilizes estimates that individual slices of the data support only weakly.

Second, Bayesian regression can be made more robust to outliers. Traditional least-squares regression can be sensitive to outliers, which can lead to inaccurate estimates. A Bayesian model can use a heavy-tailed likelihood, such as a Student-t distribution, so that extreme observations have less influence on the estimates. The prior on the parameters alone does not provide this protection. A model specified in this way is more reliable when dealing with large datasets.

Third, Bayesian regression can be more data-efficient than traditional regression methods. With an informative prior, it can require fewer data points to produce stable estimates. This should not be confused with computational efficiency: on large datasets, sampling from the posterior usually takes more time and resources than fitting a traditional regression.

Finally, Bayesian regression is more interpretable than traditional regression methods. It provides a more intuitive understanding of the relationships between the variables in the model, which can be useful when dealing with large datasets. This can help to identify important relationships between the variables and can be used to make better decisions.

Overall, Bayesian regression is a powerful tool for analyzing large datasets. It can be more flexible, robust, data-efficient, and interpretable than traditional regression methods, making it a strong choice for dealing with big data when its computational cost is acceptable.

Using Bayesian Regression to Make Predictions with Uncertainty

Bayesian regression is a powerful statistical technique that can be used to make predictions with uncertainty. It is based on Bayes’ theorem, which states that the probability of a hypothesis given the evidence is proportional to the probability of the evidence given the hypothesis, multiplied by the prior probability of the hypothesis.

Bayesian regression is a type of regression analysis that uses Bayes’ theorem to estimate the parameters of a model. It is a probabilistic approach to regression, which means that it takes into account the uncertainty associated with the data. Unlike traditional regression methods, which return a single best estimate for each parameter, Bayesian regression treats the parameters as uncertain and uses prior information together with the data to make predictions.

The Bayesian approach to regression involves specifying a prior distribution for the parameters of the model. This prior distribution is then updated using the data to obtain a posterior distribution. The posterior distribution is then used to make predictions. The advantage of this approach is that it allows for uncertainty in the data and provides a measure of the uncertainty in the predictions.
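The prior-to-posterior-to-prediction sequence can be sketched for the simplest case, assuming a Gaussian prior on the weights and a known noise variance, where every step has a closed form. The `predict` helper, the prior precision, and the synthetic data are assumptions made for illustration.

```python
import numpy as np

rng = np.random.default_rng(6)
x = rng.uniform(-1, 1, size=30)
y = 0.5 + 1.5 * x + rng.normal(scale=0.3, size=30)
X = np.column_stack([np.ones_like(x), x])   # intercept column plus x

# Prior w ~ N(0, I / prior_precision), updated with the data.
prior_precision, noise_var = 1.0, 0.3 ** 2
S = np.linalg.inv(prior_precision * np.eye(2) + X.T @ X / noise_var)  # posterior covariance
m = S @ X.T @ y / noise_var                                           # posterior mean

def predict(x_new):
    """Posterior predictive mean and standard deviation at a single input."""
    phi = np.array([1.0, x_new])
    mean = phi @ m
    # Observation noise plus uncertainty about the weights.
    var = noise_var + phi @ S @ phi
    return mean, np.sqrt(var)

mean_in, sd_in = predict(0.0)    # inside the range of the training data
mean_out, sd_out = predict(5.0)  # far outside it
```

The predictive standard deviation is larger at `x = 5.0` than at `x = 0.0`, because the model has seen no data there and uncertainty about the slope is magnified by the distance from the training inputs.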

Bayesian regression can be used in a variety of applications, such as predicting stock prices, forecasting sales, and predicting customer churn. It is particularly useful in situations where there is a lot of uncertainty in the data, such as in financial markets or in customer behavior.

Bayesian regression is a powerful tool for making predictions with uncertainty. It allows for uncertainty in the data and provides a measure of the uncertainty in the predictions. It is a useful tool for a variety of applications, such as predicting stock prices, forecasting sales, and predicting customer churn.

Comparing Bayesian Regression to Other Machine Learning Algorithms

Bayesian regression is a type of machine learning algorithm that is used to predict the value of a target variable based on the values of other variables. It is a powerful tool for predictive modeling and can be used to solve a variety of problems. Compared to other machine learning algorithms, Bayesian regression has several advantages.

First, Bayesian regression is more flexible than other algorithms. It allows for the incorporation of prior knowledge into the model, which can improve the accuracy of the predictions. Additionally, Bayesian regression can be used to estimate the uncertainty of the predictions, which can be useful for decision-making.

Second, Bayesian regression can be more robust than other algorithms. With a suitable likelihood it is less sensitive to outliers, and missing values can be treated as unknown quantities to be inferred rather than discarded. This makes it a good choice for datasets with a lot of noise or missing values.

Finally, Bayesian regression is more interpretable than other algorithms. It is easier to understand the relationships between the variables and the predictions, which can be useful for understanding the underlying processes.

Overall, Bayesian regression is a powerful tool for predictive modeling and can be used to solve a variety of problems. It is more flexible, robust, and interpretable than other machine learning algorithms, making it a good choice for many applications.

Understanding the Basics of Bayesian Regression

Bayesian regression is a statistical technique that uses Bayesian inference to estimate the parameters of a linear regression model. It is a powerful tool for analyzing data and making predictions.

Bayesian regression is based on Bayes’ theorem, which states that the probability of a hypothesis given the evidence is proportional to the probability of the evidence given the hypothesis, multiplied by the prior probability of the hypothesis. In Bayesian regression, the prior probability is the probability of the model parameters before any data is observed. The posterior probability is the probability of the model parameters after the data is observed.

Bayesian regression uses a prior distribution to represent the uncertainty in the model parameters. This prior distribution is then updated with the observed data to produce a posterior distribution. The posterior distribution is then used to make predictions about the data.
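In symbols, the update from prior to posterior can be written as follows. The notation is an assumption introduced here: $\theta$ stands for the model parameters, $D$ for the data, $w$ for the regression weights, $\alpha$ for the prior precision, and $\sigma^2$ for a noise variance that is treated as known. The closed form in the last two lines holds only for this Gaussian case.

```latex
% Bayes' theorem for the model parameters
p(\theta \mid D) = \frac{p(D \mid \theta)\, p(\theta)}{p(D)}
\quad\Longrightarrow\quad
p(\theta \mid D) \propto p(D \mid \theta)\, p(\theta)

% Linear regression with a Gaussian prior and Gaussian noise
w \sim \mathcal{N}\!\left(0,\ \alpha^{-1} I\right), \qquad
y \mid X, w \sim \mathcal{N}\!\left(Xw,\ \sigma^{2} I\right)

% Resulting posterior over the weights
w \mid X, y \sim \mathcal{N}(m, S), \qquad
S = \left(\alpha I + \sigma^{-2} X^{\top} X\right)^{-1}, \qquad
m = \sigma^{-2}\, S\, X^{\top} y
```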

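Bayesian regression is a powerful tool for analyzing data and making predictions. It is useful for estimating the coefficients of a linear regression model together with their uncertainty, as well as for understanding the relationships between variables. It can also be used to identify outliers, for example observations that fall far outside the model’s predictive intervals, and a systematic pattern of such misfits can indicate a non-linear relationship.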

Bayesian regression is a powerful tool for data analysis and prediction. It is important to understand the basics of Bayesian regression in order to make the most of this powerful technique.

An Overview of Bayesian Regression and Its Applications

Bayesian regression is a statistical technique that uses Bayesian inference to estimate the parameters of a regression model. It is a powerful tool for analyzing data and making predictions. It is used in a variety of fields, including economics, finance, engineering, and medicine.

Bayesian regression is based on Bayes’ theorem, which states that the probability of a hypothesis given the evidence is proportional to the probability of the evidence given the hypothesis, multiplied by the prior probability of the hypothesis. In Bayesian regression, the prior probability is the probability of the model parameters before the data is observed. The posterior probability is the probability of the model parameters given the data, and it combines the prior with the likelihood of the data.

In Bayesian regression, the prior distribution expresses what is known about the model parameters before the data is seen. This prior is based on prior knowledge about the problem and the model parameters. The data is then used to update the prior, and the resulting posterior distribution determines the parameter estimates.

Bayesian regression can be used to estimate the parameters of a linear regression model, a logistic regression model, or a nonlinear regression model. It can also be used to estimate the parameters of a time series model.

Bayesian regression can be used to make predictions about future events, to identify relationships between variables, and to identify outliers in the data.

Bayesian regression is a powerful tool for analyzing data and making predictions. It is used in a variety of fields, including economics, finance, engineering, and medicine. It is a useful tool for understanding the relationships between variables and making predictions about future events.

How Bayesian Regression Can Help Improve Predictive Accuracy

Bayesian regression is a powerful statistical technique that can be used to improve the accuracy of predictive models. It is based on Bayesian probability theory, which is a branch of mathematics that deals with the probability of events occurring based on prior knowledge. Bayesian regression uses prior knowledge to inform the model and make more accurate predictions.

Bayesian regression works by incorporating prior knowledge into the model. This prior knowledge can be in the form of prior distributions, which are probability distributions that represent which values of the model parameters are considered plausible before seeing the data. By incorporating prior knowledge into the model, Bayesian regression can better capture the underlying structure of the data and make more accurate predictions.

Bayesian regression also allows for more flexibility in the model. Because the prior shrinks weakly supported coefficients toward zero, additional variables that may not have been included in the original model can be added with less risk of fitting noise. This can help improve the accuracy of the predictions by allowing the model to better capture the underlying structure of the data.

Finally, Bayesian regression can also help reduce overfitting. Overfitting occurs when a model is too complex and captures too much of the noise in the data. By incorporating prior knowledge into the model, Bayesian regression can help reduce overfitting and improve the accuracy of the predictions.
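The regularizing effect of a prior can be illustrated with a deliberately over-flexible model. The sketch below fits a degree-9 polynomial to 12 noisy points, once by ordinary least squares and once using the posterior mean under a zero-mean Gaussian prior. The polynomial degree, prior precision, noise level, and helper name are assumptions chosen for illustration.

```python
import numpy as np

rng = np.random.default_rng(7)
x_train = rng.uniform(-1, 1, size=12)
y_train = np.sin(np.pi * x_train) + rng.normal(scale=0.2, size=12)

def design(x, degree=9):
    """Polynomial features 1, x, x^2, ..., x^degree."""
    return np.vander(x, degree + 1, increasing=True)

X = design(x_train)

# Ordinary least squares: no prior, free to chase the noise.
w_ols = np.linalg.lstsq(X, y_train, rcond=None)[0]

# Posterior mean under a N(0, I / prior_precision) prior with known noise variance.
prior_precision, noise_var = 1.0, 0.2 ** 2
A = prior_precision * np.eye(X.shape[1]) + X.T @ X / noise_var
w_bayes = np.linalg.solve(A, X.T @ y_train / noise_var)

# Size of the fitted coefficients under each approach.
ols_norm, bayes_norm = np.linalg.norm(w_ols), np.linalg.norm(w_bayes)
```

The prior keeps the coefficients small, so `bayes_norm` comes out below `ols_norm`, and the fitted curve is correspondingly smoother. Whether this also lowers the error on new data depends on how well the prior matches the problem.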

Overall, Bayesian regression is a powerful tool that can be used to improve the accuracy of predictive models. By incorporating prior knowledge into the model, allowing for more flexibility, and reducing overfitting, Bayesian regression can help improve the accuracy of predictions and provide more reliable results.

The Benefits of Bayesian Regression for Machine Learning

Bayesian regression is a powerful tool for machine learning that can be used to make predictions and decisions based on data. It is a type of statistical modeling that uses Bayesian inference to estimate the parameters of a model. Bayesian regression is a powerful tool for machine learning because it allows for the incorporation of prior knowledge into the model, which can improve the accuracy of predictions. Additionally, Bayesian regression can be used to identify important features in the data and to identify relationships between variables.

Bayesian regression is based on Bayes’ theorem, which states that the probability of a hypothesis given the data is proportional to the probability of the data given the hypothesis, multiplied by the prior probability of the hypothesis. This means that Bayesian regression can incorporate prior knowledge into the model, which can improve the accuracy of predictions. Additionally, Bayesian regression can be used to identify important features in the data and to identify relationships between variables.

Bayesian regression can also be used to make decisions based on data. This is because Bayesian regression can be used to calculate the probability of an event occurring given the data. This can be used to make decisions about which action to take in a given situation. For example, a Bayesian regression model could be used to determine the probability of a customer buying a product given their past purchases. This information could then be used to decide which products to promote to the customer.

Overall, Bayesian regression is a powerful tool for machine learning that can be used to make predictions and decisions based on data. It allows for the incorporation of prior knowledge into the model, which can improve the accuracy of predictions. Additionally, Bayesian regression can be used to identify important features in the data and to identify relationships between variables. Finally, it can be used to make decisions based on data by calculating the probability of an event occurring given the data.

Conclusion

Bayesian regression is a powerful and versatile tool for machine learning prediction. It provides a probabilistic approach to predicting outcomes, allowing for uncertainty and variability in the data. It is a useful tool for data scientists and machine learning practitioners, as it can be used to make predictions with greater accuracy and confidence. Bayesian regression can also be used to identify important features in a dataset, and to identify relationships between variables. With its ability to incorporate prior knowledge and to account for uncertainty, Bayesian regression is an invaluable tool for machine learning prediction.